This article details a simple experiment to test how major search engines (specifically Google, with a look at Bing) crawl and index a webpage built entirely with client-side rendered JavaScript. We'll explore what this means for SEO specialists and front-end developers.

JavaScript is a cornerstone of the modern web. As Google's John Mueller once said, "it's not going away." Rich user interactions and dynamically loaded content are here to stay.

However, JavaScript has always been a challenging topic for SEO. The complexity of JS development and the rendering process, combined with the communication gap between SEOs and developers, often creates uncertainty.

This post moves beyond theory. We'll run a live experiment to see how search engines truly interact with JavaScript-heavy websites.

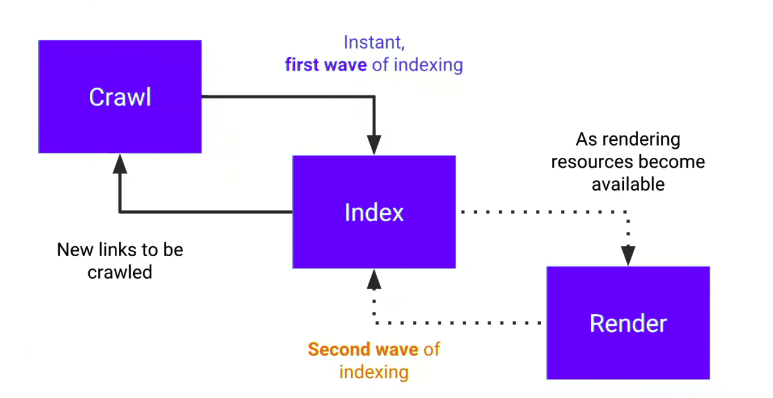

The Two Waves of Indexing

Before we start, it's essential to understand Google's two-wave indexing process:

- First Wave (Crawling & Initial Indexing): Googlebot first crawls the initial HTML source code. If your critical content (titles, text, links) is present in this raw HTML—typically achieved through Server-Side Rendering (SSR) or Static Site Generation (SSG)—it can be indexed quickly.

- Second Wave (Rendering & Full Indexing): After the initial crawl, the page is added to a queue for the Web Rendering Service (WRS). The WRS, using a headless Chrome browser, executes the JavaScript to render the full Document Object Model (DOM). This rendered content is then indexed.

The problem is the significant delay in the second wave. The rendering queue can take anywhere from a few hours to several days, or even weeks. Furthermore, not all search engines have rendering capabilities as robust as Google's.

"If you want your content to be indexed as quickly as possible, make sure the most important elements are in the initial HTML (server-side rendered)." — Martin Splitt, Google

The Experiment: How Do Search Engines Handle Pure JS?

To see this process in action, I created an "extreme" test page. This is not a best practice, but a way to clearly observe search engine behavior.

1. The Test Page Setup

My simple webpage had the following characteristics:

- Minimal Initial HTML: The raw HTML was barebones, with an empty `<title>` tag and only a simple `<h4>` and footer text in the body.

- Core Content Rendered Entirely by JS: The page's `<title>`, `<h1>`, `<meta name="description">`, main article body (generated by GPT-3), and images were all dynamically generated and injected into the page client-side via a JavaScript function.

- Content Fetched via AJAX: To add another layer of complexity, the content was fetched from the server via a remote AJAX call.

- Structured Data Injected by JS: An `Article` Schema.org object was also injected via JavaScript.

```javascript
// Simplified example: render the core content client-side from an AJAX response
$(document).ready(function () {
  // Fetch article data via AJAX
  $.ajax({
    url: '/api/get-article-data',
    dataType: 'json', // parse the response body as JSON
    success: function (data) {
      // Use the fetched data to render page elements
      document.title = data.title;
      $('h1#main-title').text(data.h1);

      // The initial HTML may not contain a meta description, so create one if needed
      var $meta = $('meta[name="description"]');
      if ($meta.length === 0) {
        $meta = $('<meta name="description">').appendTo('head');
      }
      $meta.attr('content', data.description);

      $('#article-content').html(data.body);

      // Dynamically inject Schema.org structured data
      var script = document.createElement('script');
      script.type = 'application/ld+json';
      script.text = JSON.stringify(data.schemaData);
      document.head.appendChild(script);
    }
  });
});
```

The strategy was to make the JS-rendered content drastically different from the initial HTML. If the search engine results page (SERP) snippet changed, it would be clear that the JavaScript had been processed.
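The reasoning behind this setup fits in a few lines: if the title shown in the SERP matches the title that only JavaScript produces, the search engine must have rendered the page. The helper below is a hypothetical illustration, not tooling from the experiment; treating a prefix match as a hit is an assumption about how SERPs shorten long titles.

```javascript
// Hypothetical helper: decide which version of a page a SERP title reflects.
// Assumption: the JS-rendered title is distinctive, so a match means the
// search engine executed the page's JavaScript before indexing it.
function normalize(text) {
  // Lowercase and collapse whitespace so cosmetic differences don't matter
  return String(text || "").trim().replace(/\s+/g, " ").toLowerCase();
}

function classifySnippet(serpTitle, renderedTitle) {
  // Drop a trailing ellipsis that SERPs may append to truncated titles
  const shown = normalize(serpTitle).replace(/(\.\.\.|…)$/, "").trim();
  const rendered = normalize(renderedTitle);
  // A (possibly truncated) match with the JS-only title implies rendering
  if (shown.length > 0 && rendered.startsWith(shown)) {
    return "rendered";
  }
  return "initial";
}

// Usage: compare what the SERP shows with the title only JavaScript produces
console.log(classifySnippet("JavaScript SEO Test", "JavaScript SEO Test")); // rendered
console.log(classifySnippet("Home", "JavaScript SEO Test")); // initial
```

Because the raw HTML in this test had an empty `<title>`, any first-wave title had to come from somewhere else on the page, which is why the helper only checks for a match against the rendered title.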

2. Submission and Initial Testing with SeoSpeedup

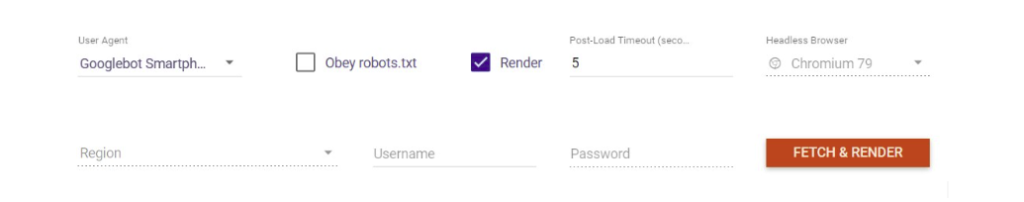

After publishing the page, I submitted the URL through Google Search Console and Bing Webmaster Tools. I then used SeoSpeedup's Site Crawler to validate the setup.

- SeoSpeedup's JavaScript Rendering Test: SeoSpeedup's crawler can be configured to render JavaScript, simulating Googlebot. The test confirmed that our crawler correctly rendered the full page, including all the content loaded via the AJAX call. This is a crucial first step to ensure your site is technically capable of being rendered.

- Google's Rich Results Test: This official tool also successfully generated the rendered DOM and correctly identified the `Article` structured data that was injected by JavaScript. This confirmed that Google could see the content if it rendered the page.
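For reference, a `schemaData` payload for a Schema.org `Article` might be built like this. The exact fields used on the test page are not given, so the builder function, the chosen properties (`headline`, `description`, `image`, `datePublished`, `author`), and all values are placeholder assumptions.

```javascript
// Hypothetical builder for a Schema.org Article object in JSON-LD form.
// Property choice and values are placeholders, not the test page's real data.
function buildArticleSchema({ headline, description, imageUrl, datePublished, authorName }) {
  return {
    "@context": "https://schema.org",
    "@type": "Article",
    headline,
    description,
    image: [imageUrl],
    datePublished, // ISO 8601 date string
    author: { "@type": "Person", name: authorName },
  };
}

const schemaData = buildArticleSchema({
  headline: "JavaScript SEO Test",
  description: "Testing how search engines index client-side rendered content.",
  imageUrl: "https://example.com/images/main.jpg",
  datePublished: "2023-01-15",
  authorName: "Example Author",
});

// This string is what ends up inside <script type="application/ld+json">
const jsonLd = JSON.stringify(schemaData, null, 2);
console.log(jsonLd);
```

Pasting the resulting JSON-LD into the Rich Results Test is a quick way to check it before wiring it into the page.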

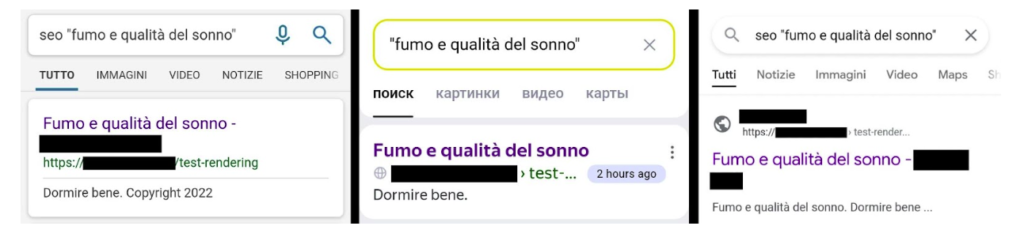

3. The First Wave Indexing Results

A few hours after submission, the page appeared in Google and Bing search results.

Crucially, both search engines showed a title and description derived from the minimal content in the initial HTML source code, not the JS-rendered content. This perfectly demonstrates the first wave of indexing.
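What the first wave misses can be made explicit by comparing the raw and rendered HTML. The sketch below extracts the title, H1, and meta description from both versions and lists the elements that only exist after rendering. The function names and sample HTML are hypothetical (the sample loosely mirrors the test page, with an empty `<title>` and an `<h4>` in the raw HTML), and the regex-based extraction is a deliberate simplification; a real audit should use an HTML parser.

```javascript
// Simplified extractor: regexes are fine for a quick check, not a robust HTML parser.
// Assumption: the meta tag lists name="description" before content="...".
function extractCritical(html) {
  const pick = (re) => {
    const m = html.match(re);
    // Strip any nested tags from the captured text
    return m ? m[1].replace(/<[^>]*>/g, "").trim() : "";
  };
  return {
    title: pick(/<title[^>]*>([\s\S]*?)<\/title>/i),
    h1: pick(/<h1[^>]*>([\s\S]*?)<\/h1>/i),
    description: pick(/<meta[^>]+name=["']description["'][^>]*content=["']([^"']*)["']/i),
  };
}

// Report critical elements that are empty in the raw HTML but filled after rendering
function findJsOnlyElements(rawHtml, renderedHtml) {
  const raw = extractCritical(rawHtml);
  const rendered = extractCritical(renderedHtml);
  return Object.keys(rendered).filter((key) => !raw[key] && rendered[key]);
}

const rawHtml = "<html><head><title></title></head><body><h4>Loading</h4></body></html>";
const renderedHtml =
  '<html><head><title>JS SEO Test</title><meta name="description" content="Rendered by JS"></head>' +
  "<body><h1>Main heading</h1></body></html>";

console.log(findJsOnlyElements(rawHtml, renderedHtml)); // [ 'title', 'h1', 'description' ]
```

Every element in that list is invisible to a first-wave crawl. Running the same check on a server-rendered page should return an empty array.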

4. The Second Wave Results: Success, with a Delay

After a few more days, the results changed:

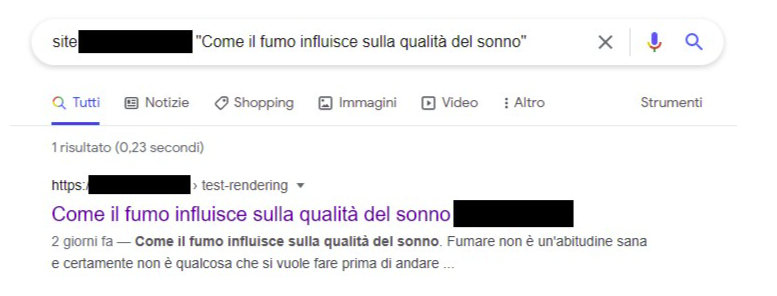

1) Google:

- Successfully rendered the JS and updated the SERP snippet! The search result now showed the title and description that were dynamically generated by JavaScript.

- A `site:` search confirmed that Google had indexed the body text rendered by JavaScript.

- The primary image rendered by JS was also indexed and appeared in Google Images.

Conclusion: Google can and does process client-side rendered JavaScript, including content fetched via AJAX. However, this relies on the second wave of indexing and involves a delay of several days.

2) Bing:

- Throughout the entire testing period, Bing's SERP snippet never changed. It continued to display the content from the initial HTML.

Conclusion: Bing (at least in this test) failed to effectively process and index the core content rendered by client-side JavaScript.

The Solution for Broader Compatibility: Dynamic Rendering

Since Bing struggled, I tested a solution: Dynamic Rendering. This involves configuring the server to detect the user agent.

- If it's a regular user's browser, serve the normal JavaScript-heavy page.

- If it's a search engine bot (like Bingbot), serve a pre-rendered, static HTML version of the page.
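The user-agent switch described above can be sketched with Node's built-in `http` module. The bot token list, the `isSearchBot` and `chooseVariant` helpers, and the two placeholder HTML strings are assumptions for illustration; in practice the pre-rendered version would come from a headless browser or a prerendering service, and the bot list needs ongoing maintenance.

```javascript
const http = require("http");

// Illustrative, non-exhaustive list of crawler tokens found in User-Agent strings
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|yandex/i;

function isSearchBot(userAgent) {
  return BOT_PATTERN.test(userAgent || "");
}

// Decide which version of the page to serve for a given User-Agent
function chooseVariant(userAgent) {
  return isSearchBot(userAgent) ? "prerendered-html" : "client-side-app";
}

// Placeholders: a real setup would serve a stored snapshot of the rendered page
const PRERENDERED_HTML =
  "<!DOCTYPE html><html><head><title>Rendered title</title></head><body><h1>Rendered heading</h1></body></html>";
const APP_SHELL_HTML =
  '<!DOCTYPE html><html><head><title></title></head><body><div id="app"></div><script src="/app.js"></script></body></html>';

const server = http.createServer((req, res) => {
  const variant = chooseVariant(req.headers["user-agent"]);
  res.setHeader("Content-Type", "text/html; charset=utf-8");
  // Tell caches that the response depends on the User-Agent header
  res.setHeader("Vary", "User-Agent");
  res.end(variant === "prerendered-html" ? PRERENDERED_HTML : APP_SHELL_HTML);
});

// server.listen(3000);
```

With `server.listen(3000)` uncommented, a request such as `curl -A "bingbot" http://localhost:3000/` should return the pre-rendered markup, while a normal browser receives the JavaScript app shell.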

After configuring dynamic rendering, I requested re-indexing from Bing. The test period ended before any change was observed, so this experiment cannot confirm the outcome. Still, the approach gives bots a fully formed HTML document and removes their need to render JS, which makes it a practical (though complex) workaround for less advanced crawlers.

Key Takeaways and Recommendations

This experiment clearly demonstrates:

- Google can handle client-side JS, but there's a delay. For time-sensitive content like news or events, relying purely on client-side rendering (CSR) is risky.

- Other search engines, like Bing, lag significantly behind. If these engines are important for your traffic, a pure CSR approach is not viable.

Recommendations for Developers and SEOs:

- Use SSR or SSG for Critical Content. Your page's `<title>`, `<meta name="description">`, H1, primary text, and main navigation should always be present in the initial HTML source. Modern frameworks like Next.js (for React) and Nuxt.js (for Vue) make this highly achievable and should be the default choice for SEO-critical websites.

- Avoid Pure Client-Side Rendering (CSR) for Core Content. Even if you only care about Google, you must accept the indexing delay. For broader search engine compatibility, it's a non-starter.

- Use SeoSpeedup to Monitor Your Rendering. After deploying changes, run a crawl with JavaScript rendering enabled in SeoSpeedup. Check the rendered HTML to ensure your critical content is visible. Use the GSC integration to "Inspect URL" and verify that Google's view of the page matches your expectations.
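The first recommendation boils down to one rule: the critical elements must already be in the HTML string the server sends. Frameworks such as Next.js and Nuxt.js handle this for you; the plain Node.js sketch below only illustrates the idea. `renderArticlePage`, `escapeHtml`, and the shape of the `data` object are hypothetical, and the body is treated as plain text.

```javascript
// Hypothetical helper: escape text before interpolating it into HTML
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical SSR renderer: title, meta description, H1, and body text are
// written into the HTML on the server, so no JavaScript is needed to see them.
function renderArticlePage(data) {
  return [
    "<!DOCTYPE html>",
    '<html lang="en">',
    "<head>",
    '  <meta charset="utf-8">',
    `  <title>${escapeHtml(data.title)}</title>`,
    `  <meta name="description" content="${escapeHtml(data.description)}">`,
    "</head>",
    "<body>",
    `  <h1>${escapeHtml(data.h1)}</h1>`,
    // Assumption: the body is plain text; trusted HTML would need a separate path
    `  <main><p>${escapeHtml(data.body)}</p></main>`,
    "</body>",
    "</html>",
  ].join("\n");
}

// Usage
const html = renderArticlePage({
  title: "JavaScript SEO Test",
  description: "A test page rendered on the server.",
  h1: "Server-rendered heading",
  body: "Article text & more",
});
console.log(html);
```

To verify the result on a live page, view the page source rather than the DevTools Elements panel (which shows the rendered DOM): the title, meta description, and H1 should all be there.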

"Is the complexity of introducing SSR/SSG into a project worth it? The answer is simple: Yes, if you want to deliver an excellent user experience while ensuring your SEO is rock-solid."

Embrace modern front-end technologies, but always remember to give search engines a clear, easily accessible path to your content.